5.11. More Advanced Neural Networks

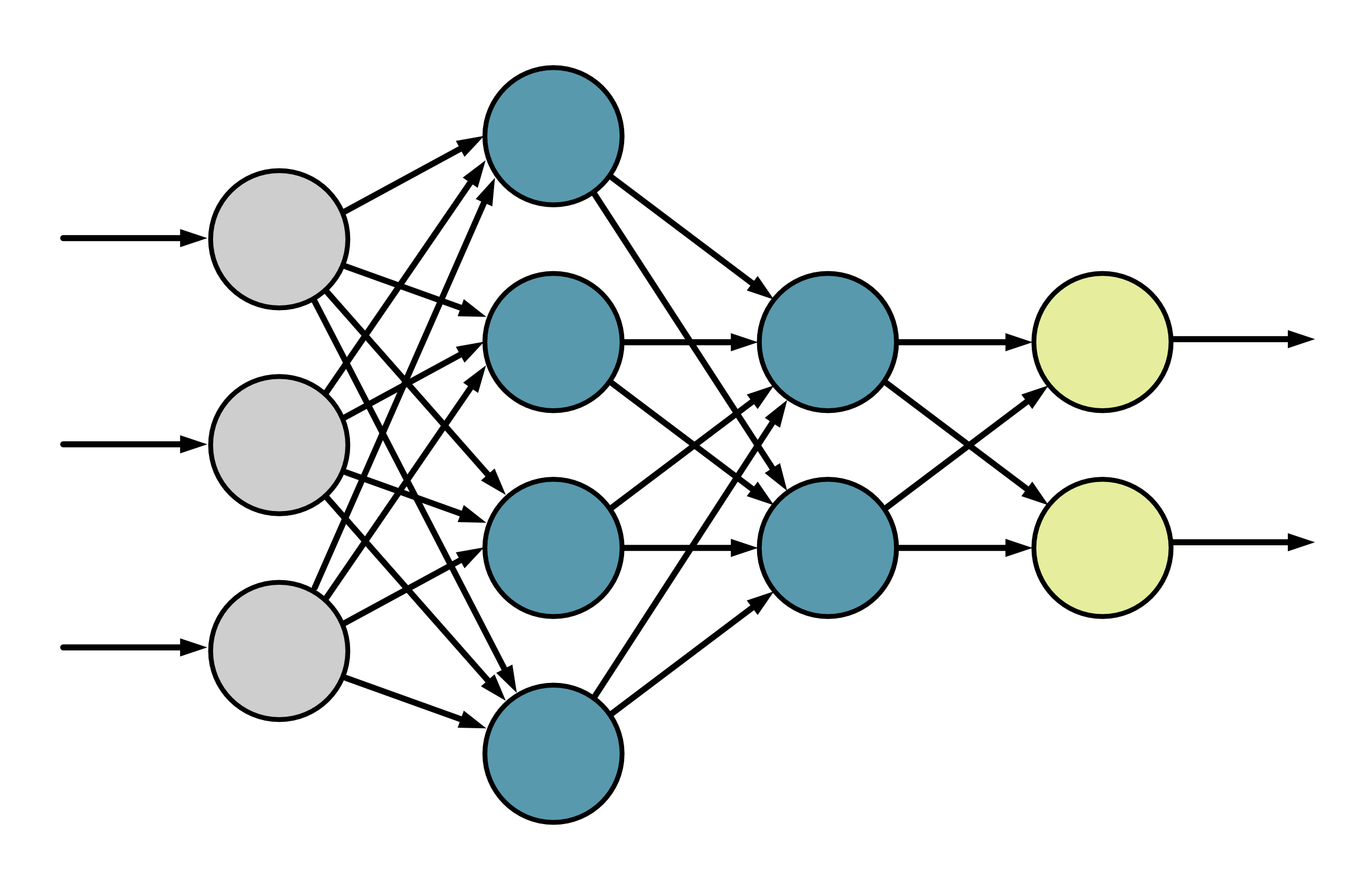

5.11.1. Dense Neural Network

What we have looked at in this module are dense, fully connected neural networks, also called multilayer perceptrons. This is the simplest form of neural network. There are lots of other types of neural networks out there, all with different use cases. These are beyond the scope of this course but a few key examples are included here to provide a flavour of the different types.
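As a reminder of what "dense, fully connected" means in practice, here is a minimal NumPy sketch of a forward pass. The layer sizes, the ReLU activation, and the helper name `dense_forward` are illustrative assumptions, not part of the course material.

```python
import numpy as np

def dense_forward(x, weights, biases):
    """Forward pass through a fully connected network (ReLU on hidden layers)."""
    a = x
    last = len(weights) - 1
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b  # every input unit connects to every output unit
        a = z if i == last else np.maximum(z, 0.0)  # final layer left linear
    return a

rng = np.random.default_rng(0)
sizes = [4, 8, 3]  # assumed sizes: 4 inputs, 8 hidden units, 3 outputs
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

x = rng.normal(size=(5, 4))  # batch of 5 samples
out = dense_forward(x, weights, biases)
print(out.shape)
```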

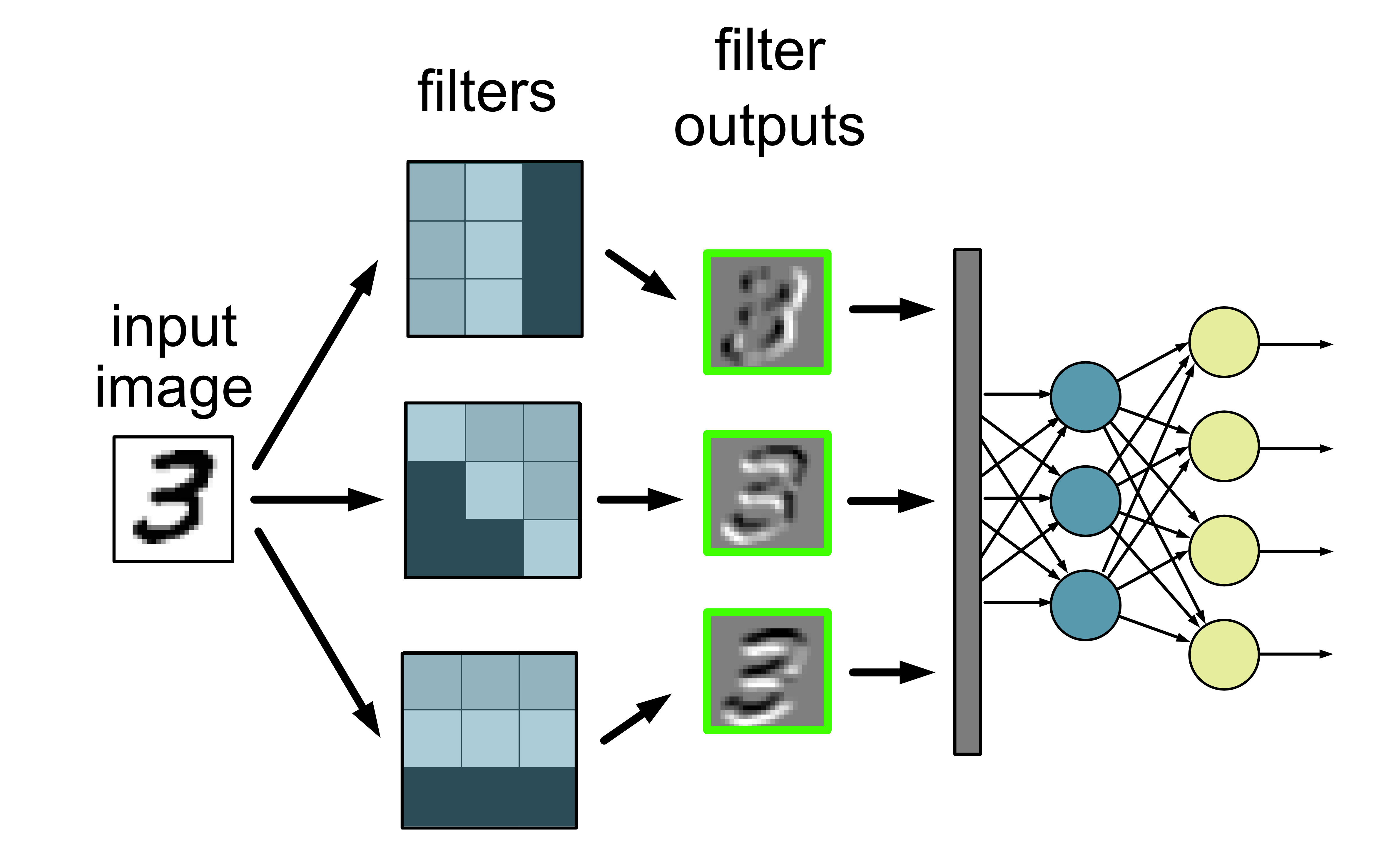

5.11.2. Convolutional Neural Networks

Convolutional neural networks are typically used when the input to the network is an image. Instead of connecting every neuron to every input, they slide small filters across the image using a mathematical operation called convolution. Pooling layers then downsample the resulting feature maps, compressing the information. At the end, one or more dense layers interpret the extracted features to produce the output.
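The two building blocks described here, a filter applied by convolution and a pooling step, can be sketched in plain NumPy. This is a simplified illustration with one hand-picked filter and no padding or stride options. In a real network the filter values are learned, and the names `conv2d` and `max_pool` are our own.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide a filter over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the patch by the filter elementwise and sum
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    h = feature_map.shape[0] - feature_map.shape[0] % size
    w = feature_map.shape[1] - feature_map.shape[1] % size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)   # toy 6x6 "image"
edge_filter = np.array([[1.0, 0.0, -1.0]] * 3)     # responds to horizontal change

features = conv2d(image, edge_filter)  # 4x4 feature map
pooled = max_pool(features)            # 2x2 after pooling
print(features.shape, pooled.shape)
```

The pooled output would then be flattened and passed to a dense layer for the final prediction.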

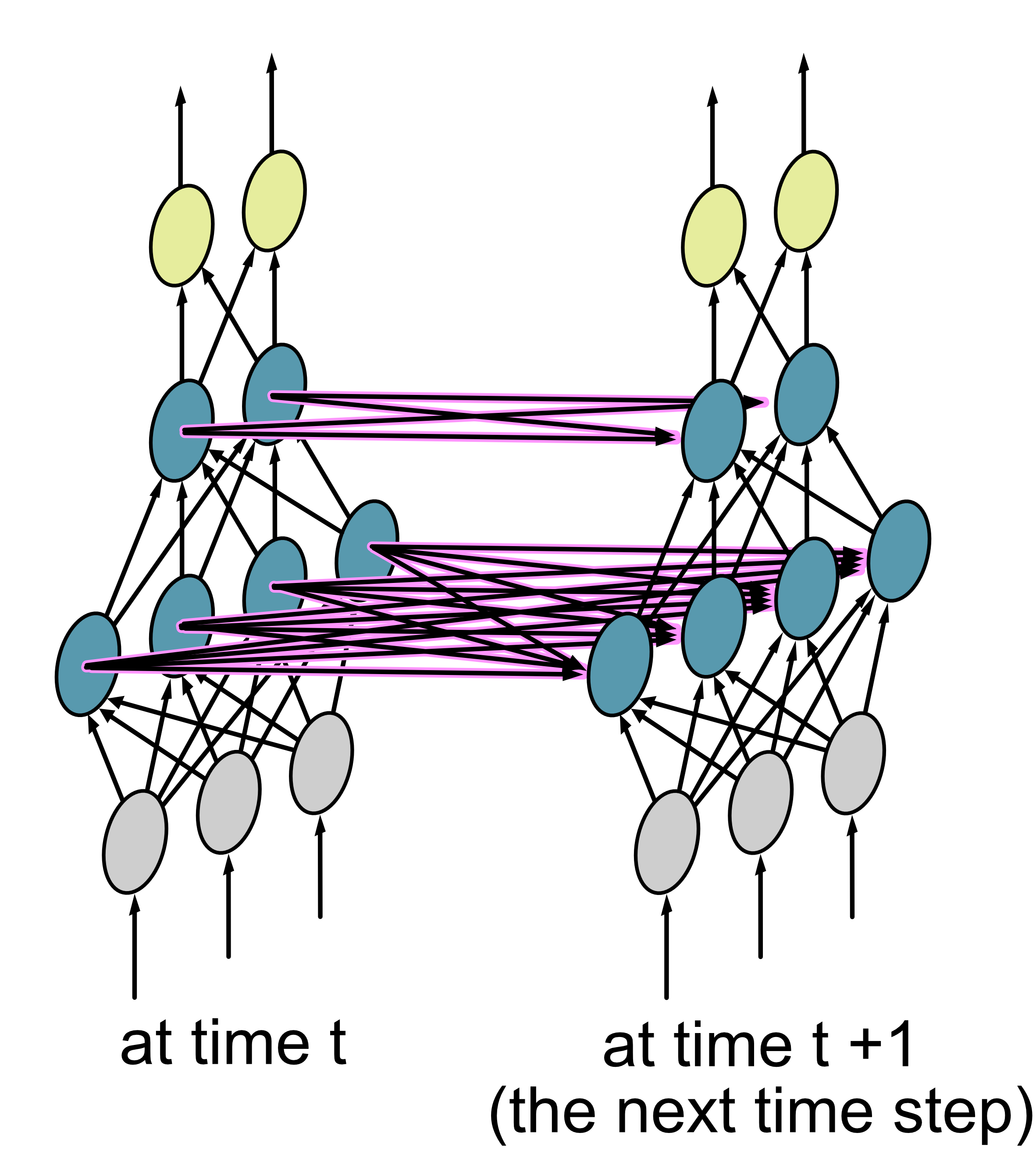

5.11.3. Recurrent Neural Networks

Recurrent neural networks are designed to handle time series or sequential data. Unlike standard dense neural networks, RNNs can pass information from one time step to the next, allowing them to capture temporal patterns. More advanced versions of basic RNNs use Gated Recurrent Units (GRUs) or Long Short-Term Memory (LSTM) units, which are better at retaining information over longer sequences.
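The idea of passing information from one time step to the next can be sketched with a basic recurrent cell. This is a plain RNN rather than a GRU or LSTM, and the weight shapes, the `tanh` activation, and the name `rnn_forward` are assumptions made for illustration.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a basic recurrent cell over a sequence, returning every hidden state."""
    h = np.zeros(W_h.shape[0])  # hidden state starts empty
    states = []
    for x_t in inputs:
        # The new state depends on the current input AND the previous state
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
sequence = rng.normal(size=(10, 3))        # 10 time steps, 3 features each
W_x = rng.normal(scale=0.5, size=(3, 4))   # input-to-hidden weights
W_h = rng.normal(scale=0.5, size=(4, 4))   # hidden-to-hidden weights
b = np.zeros(4)

hidden_states = rnn_forward(sequence, W_x, W_h, b)
print(hidden_states.shape)
```

The same weights are reused at every time step. Only the hidden state `h` changes, and it is what carries the memory of earlier inputs.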

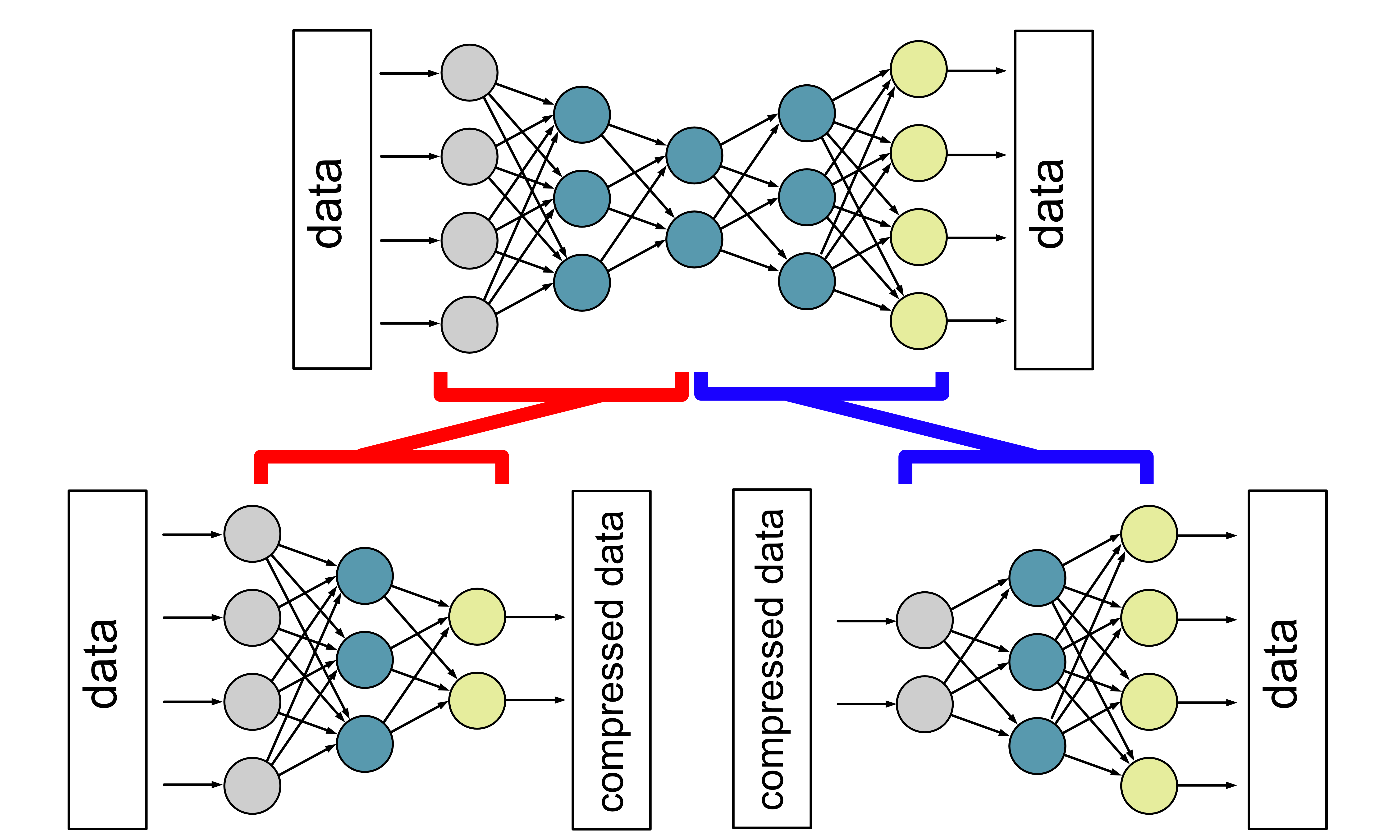

5.11.4. Autoencoders

Autoencoders are dense neural networks with a bottleneck structure. They can be used for data compression: the network is trained to output the same information as its input, so it has to learn a compact representation in the narrow middle layer. After training, the network is split into two halves, where one (the encoder) is used for compression and the other (the decoder) for reconstruction.
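A very small example may help make the bottleneck idea concrete. The sketch below trains a linear autoencoder (no activation functions) with plain gradient descent on made-up data. The data, layer sizes, learning rate, and number of steps are all assumptions chosen to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy data: 200 samples in 6 dimensions that only vary along 2 directions
X = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 6))

W_enc = rng.normal(scale=0.1, size=(6, 2))  # 6 inputs -> 2-unit bottleneck
W_dec = rng.normal(scale=0.1, size=(2, 6))  # bottleneck -> 6 outputs

def encode(x):
    return x @ W_enc      # compression half

def decode(code):
    return code @ W_dec   # reconstruction half

def loss():
    return np.mean((decode(encode(X)) - X) ** 2)

initial_loss = loss()
lr = 0.01
for _ in range(1000):
    code = encode(X)
    grad_out = 2.0 * (decode(code) - X) / X.size  # gradient of the mean squared error
    grad_dec = code.T @ grad_out
    grad_enc = X.T @ (grad_out @ W_dec.T)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc
final_loss = loss()

compressed = encode(X)           # 6 numbers per sample reduced to 2
restored = decode(compressed)    # expanded back to 6
print(initial_loss, final_loss)
```

Once trained, `encode` and `decode` can be used separately, which corresponds to splitting the network into its two halves.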

5.11.5. Generative Adversarial Networks

The idea behind Generative Adversarial Networks is that two neural networks are trained in opposition to each other. One is the generator, which attempts to create realistic outputs, and the other is the discriminator, which tries to distinguish between real and generated data. For example, the generator might try to produce images of dogs, while the discriminator works to tell apart real dog images from the ones generated by the model. Over time, the generator improves its ability to fool the discriminator.
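The opposition between the two networks shows up in their loss functions. The sketch below covers only the losses, not the networks or the training loop, and uses one common formulation (binary cross-entropy with the "non-saturating" generator loss). The discriminator scores are made-up numbers standing in for the probability that an image is real.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Low when real images score near 1 and generated images score near 0."""
    return -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))

def generator_loss(d_fake, eps=1e-12):
    """Low when the discriminator scores generated images near 1 (it is fooled)."""
    return -np.mean(np.log(d_fake + eps))

# Hypothetical discriminator outputs
d_real = np.array([0.90, 0.80, 0.95])   # scores on real dog images
caught = np.array([0.10, 0.20, 0.05])   # scores on poor fakes
fooled = np.array([0.90, 0.85, 0.80])   # scores on convincing fakes

print(generator_loss(caught), generator_loss(fooled))
print(discriminator_loss(d_real, caught), discriminator_loss(d_real, fooled))
```

Convincing fakes lower the generator's loss and raise the discriminator's, so improving one network makes the task harder for the other.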

5.11.6. Transformers

Transformers are used to process sequences of data, such as text, all at once rather than step-by-step. Instead of relying on recurrence, Transformers use a mechanism called self-attention, where each word in the input can focus on other words to understand their meaning in context. For example, the presence of other words such as ‘river’ or ‘money’ in the same sentence as the word ‘bank’ is used to determine whether it refers to a riverbank or a financial institution. The network learns these relationships through multiple layers of attention and feedforward processing. As training progresses, the Transformer becomes better at capturing the structure and meaning of sequences, making it highly effective for tasks like translation, summarisation, and text generation.
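The self-attention mechanism can be sketched in a few lines of NumPy. This is a single attention head using the standard scaled dot-product form, with random matrices standing in for learned weights and word embeddings. The dimensions and variable names are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over a whole sequence at once."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # how relevant each word is to each other word
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights              # context-aware representation per word

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))  # 5 "words", each an 8-dimensional embedding
W_q, W_k, W_v = (rng.normal(size=(8, 4)) for _ in range(3))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)
```

Row `i` of `weights` shows how much word `i` attends to every word in the sequence, which is how a word like ‘bank’ can draw on ‘river’ or ‘money’ wherever they appear.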