5.6. Training a Neural Network

At the moment our neural network isn’t very good. You can see that it hasn’t performed well on the samples we have given it: the predicted colours are completely different from the actual colours.

| Sample | Predicted | Actual | Error (Predicted - Actual) |
|---|---|---|---|
| (150, 25, 150) | (107, 32) | (300, 71) | (-193, -39) |
| (50, 60, 180) | (124.3, 38.8) | (235, 57) | (-110.7, -18.2) |
| (250, 200, 170) | (170, 50) | (23, 89) | (147, -39) |
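The error column can be reproduced with a few lines of Python. This is a minimal sketch that uses only the numbers from the table. The variable names are chosen for illustration, and the mean squared error at the end is one common way of summarising all the errors as a single number.

```python
# Predicted and actual outputs for the three samples in the table
predicted = [(107, 32), (124.3, 38.8), (170, 50)]
actual = [(300, 71), (235, 57), (23, 89)]

# Error for each output value: predicted minus actual
# (rounded to one decimal place to tidy up floating point noise)
errors = [
    tuple(round(p - a, 1) for p, a in zip(pred, act))
    for pred, act in zip(predicted, actual)
]

# Mean squared error: the average of the squared errors over all six values
squared = [e ** 2 for row in errors for e in row]
mse = sum(squared) / len(squared)

print(errors)
print(round(mse, 2))
```

Because the errors are squared, large misses such as -193 dominate the result, and positive and negative errors cannot cancel each other out.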

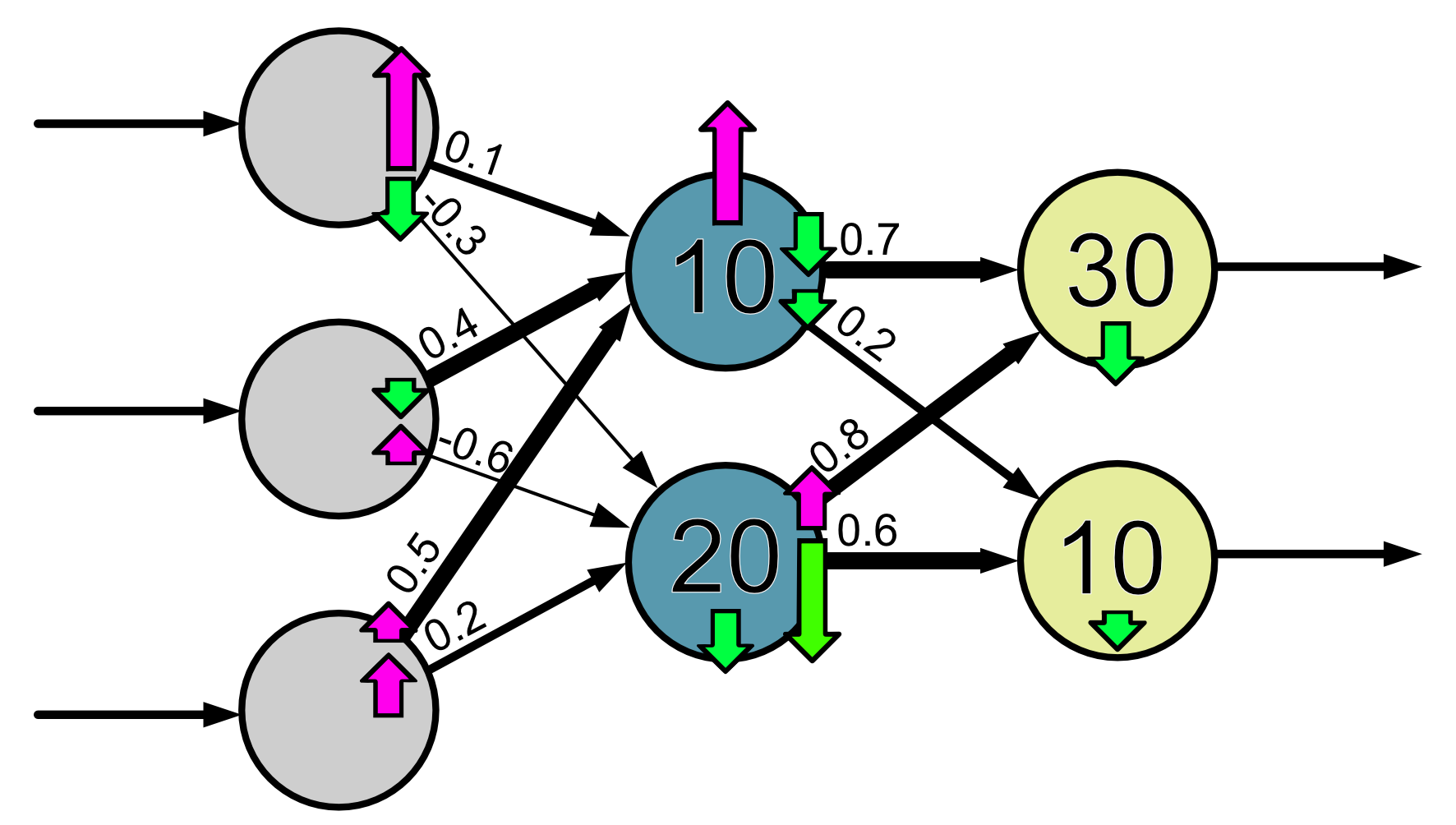

Currently the values of the weights and biases are random, so our network isn’t likely to perform well! We should be able to improve the performance of our neural network through training, where we adjust the weights and biases based on training data.

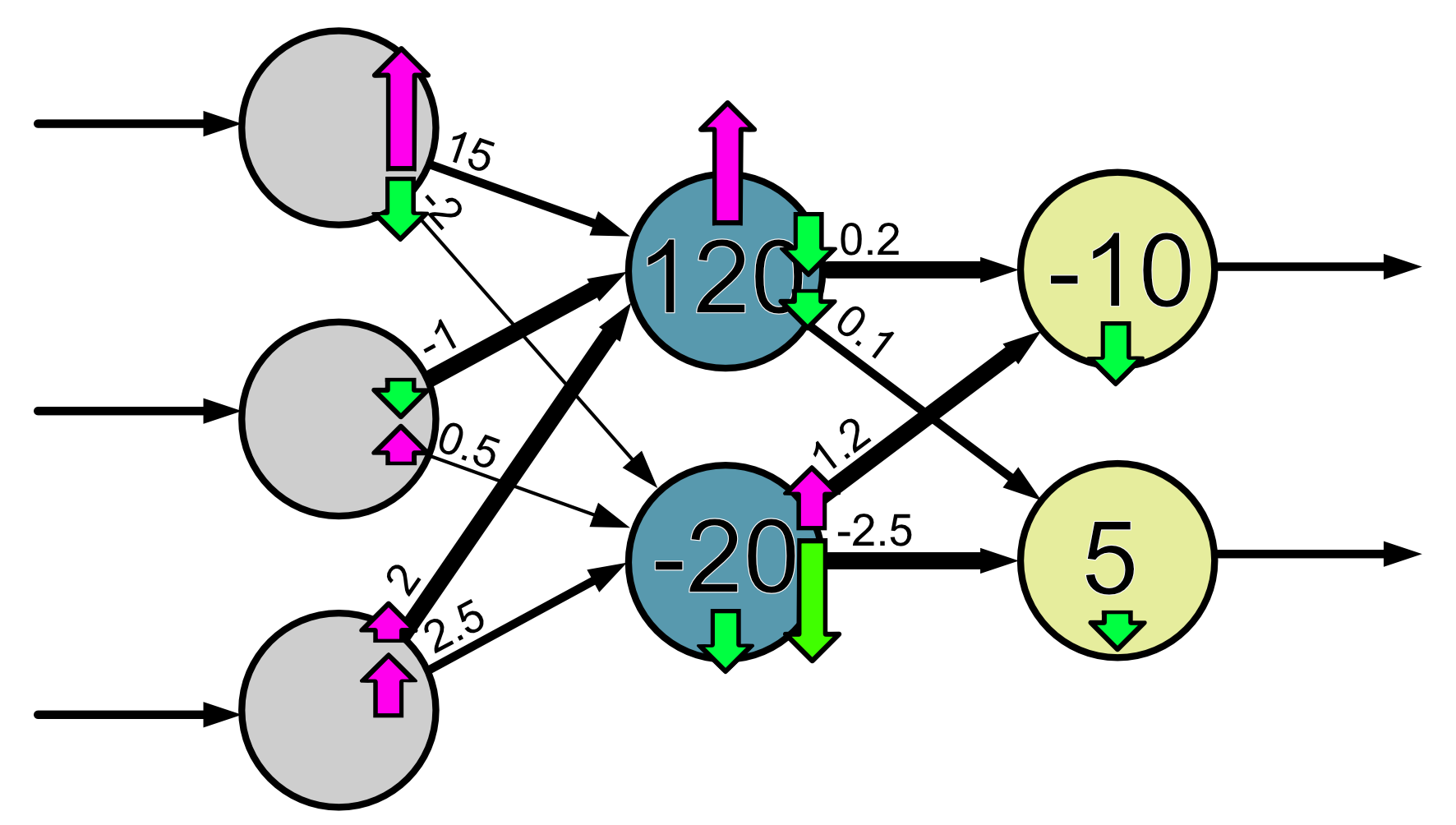

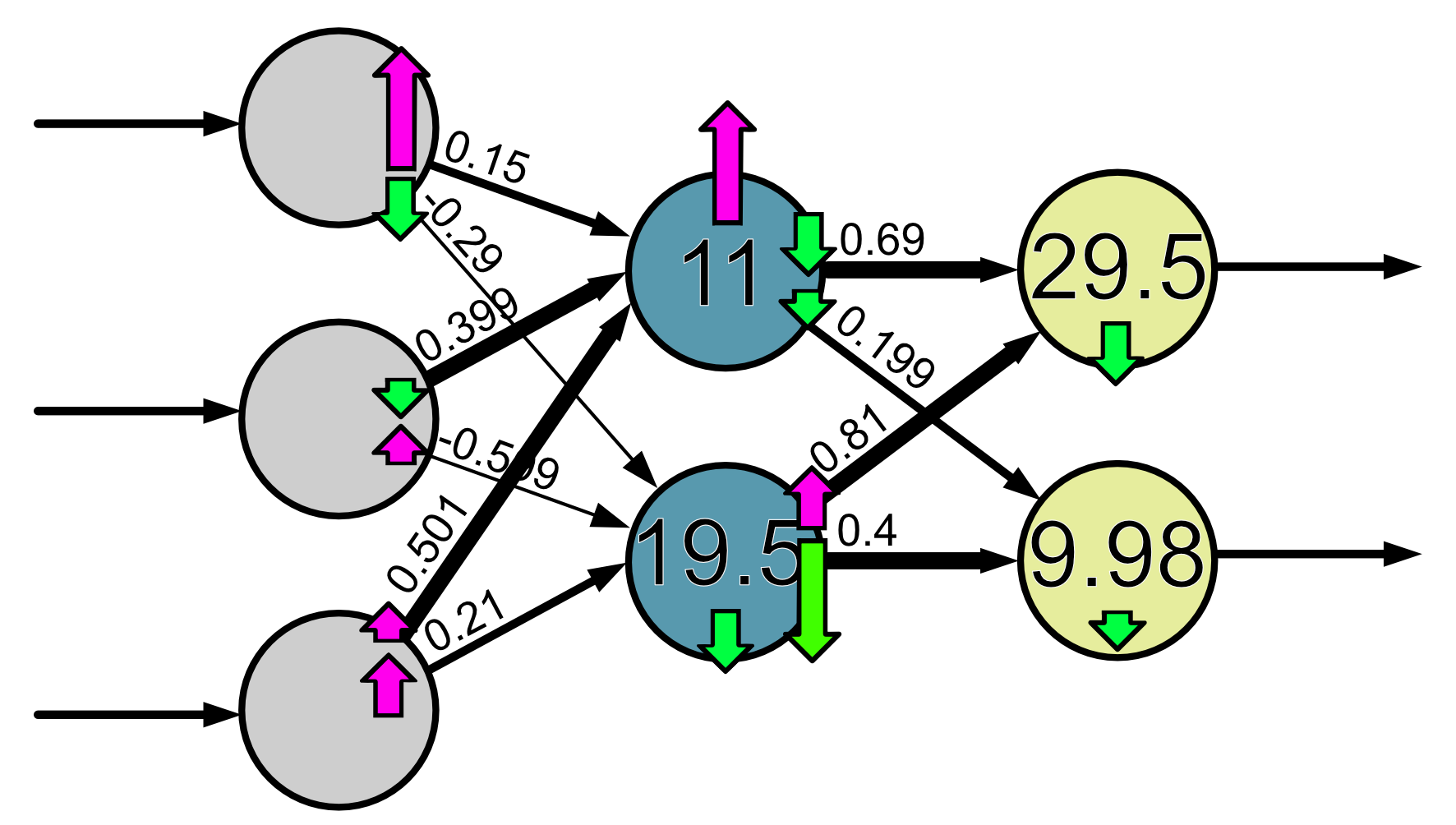

The way we train a neural network is through a process called back propagation. The maths is a little complicated, but essentially, after we see the training samples we can determine which ‘direction’ to update each of the values in the network, i.e. whether each value needs to be increased or decreased. We also know the relative amount by which these values need to change. For example, we might know that one of the biases needs to be increased by a lot, while the others need to be decreased by a smaller amount.

While we know the relative amount we increase/decrease values by, the overall amount will depend on the learning rate. If the learning rate is large, we increase/decrease values by a large amount.

If the learning rate is small, we increase/decrease values by a small amount.
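The interaction between the direction, the relative amount and the learning rate can be sketched in code. This sketch assumes the usual gradient descent style rule, where each value moves by the learning rate multiplied by its gradient. The function name `update_values` and all of the numbers are hypothetical and chosen only for illustration.

```python
def update_values(values, gradients, learning_rate):
    """Move each value against its gradient, scaled by the learning rate."""
    return [v - learning_rate * g for v, g in zip(values, gradients)]

# Hypothetical values (e.g. three biases) and their gradients.
# A negative gradient means the value should increase, and a larger
# magnitude means it should change by a relatively larger amount.
values = [0.5, -0.2, 0.1]
gradients = [-4.0, 1.0, 0.5]

large_step = update_values(values, gradients, learning_rate=0.1)
small_step = update_values(values, gradients, learning_rate=0.001)

print(large_step)
print(small_step)
```

In both cases the first value increases and the other two decrease, and the first value always changes the most. Only the overall size of the change differs between the two learning rates.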

The idea is that we keep repeating this process over and over again, each time ‘tweaking’ the neural network so that the values move in the right direction and the network learns slowly over time. The number of repeats we do is called the number of iterations.
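The repeated ‘tweaking’ can be illustrated with a small training loop. This is a simplified sketch rather than a real neural network: it assumes a model with a single weight and a single bias, made-up training data that follows y = 2x + 1, and gradients worked out directly for the mean squared error instead of through back propagation. The function name `train` is hypothetical.

```python
def train(xs, ys, learning_rate, iterations):
    """Fit y = w * x + b by repeatedly nudging w and b to reduce the error."""
    w, b = 0.0, 0.0  # starting values (fixed here so the run is repeatable)
    n = len(xs)
    losses = []
    for _ in range(iterations):
        predictions = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(predictions, ys)]
        # Record the mean squared error before this iteration's update
        losses.append(sum(e ** 2 for e in errors) / n)
        # Direction and relative amount of change for each value
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Overall amount of change is scaled by the learning rate
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, losses

# Made-up training data that follows y = 2x + 1
xs = [0, 1, 2, 3]
ys = [1, 3, 5, 7]

w, b, losses = train(xs, ys, learning_rate=0.05, iterations=500)
print(round(w, 3), round(b, 3))
print(losses[0], losses[-1])
```

Each pass through the loop is one iteration. The recorded `losses` list holds the training error at every iteration, which is what you would plot to see how training is going.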

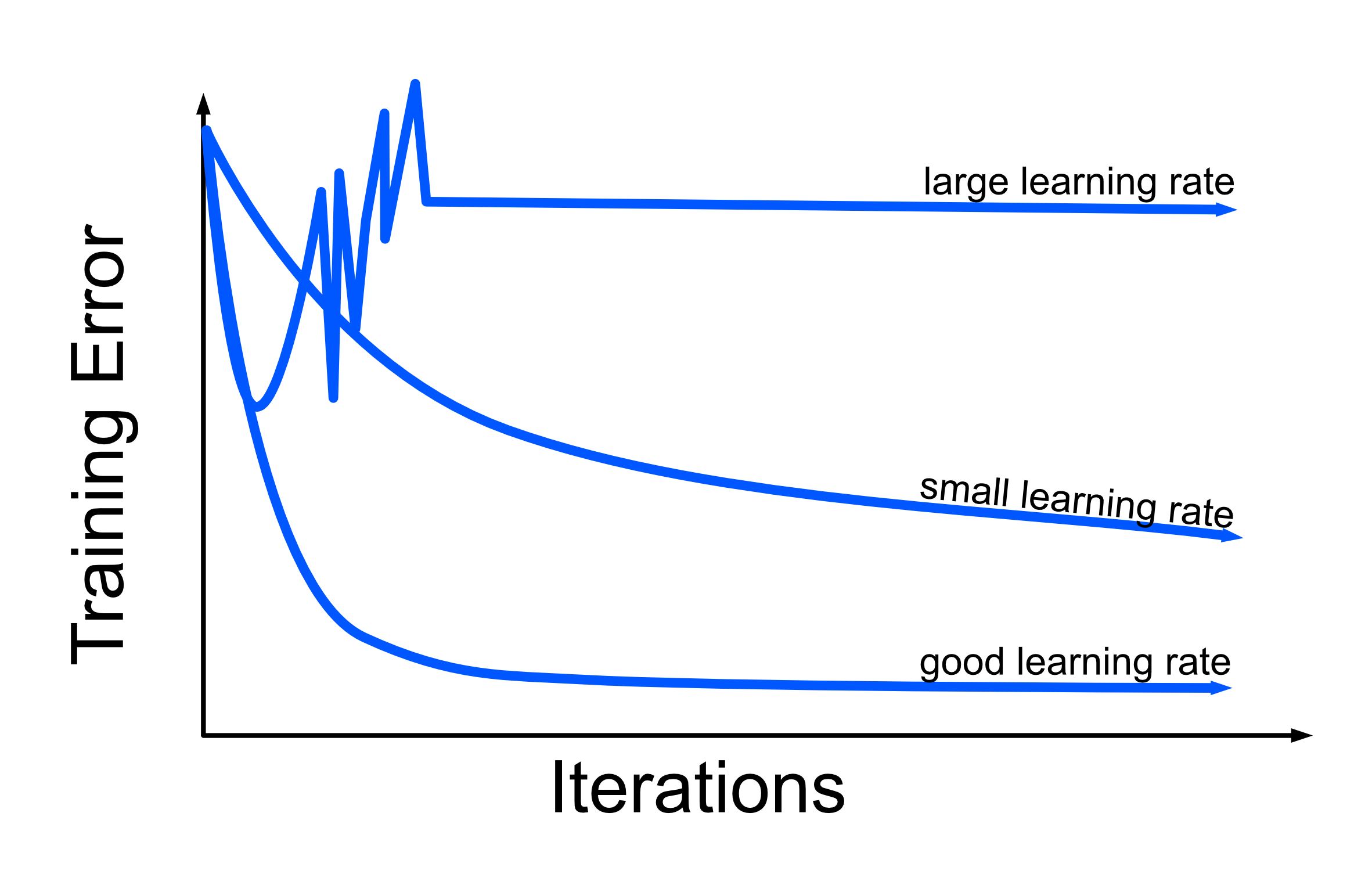

Picking a good learning rate is important. If you pick a learning rate that is too large, the neural network training can be erratic because you’re changing the values too much each time. If you pick a learning rate that is too small, the neural network training can be very slow, and it could take the network days to learn instead of hours. One way to know whether the neural network is doing well is to look at how the error changes on the training data. Ideally the error (e.g. the mean squared error) should decrease slowly and smoothly. A good learning rate depends on the problem you’re trying to solve, but learning rates are typically in the range 0.001 to 0.1.

Here is a sketch of how the training error might change for different learning rates. These curves are called loss curves.

Note that the curve for a high learning rate may take very different shapes depending on the network and the problem it is trying to solve. The main way to recognise it is that the curve doesn’t descend smoothly towards a low error.
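The effect of the learning rate on the training error can also be seen numerically. The sketch below assumes a toy problem with a single value `v` and a loss of `v ** 2`, so the best possible value is 0. The function name `loss_curve` and the three learning rates are illustrative only, and the rate that counts as ‘too large’ here is specific to this toy problem.

```python
def loss_curve(learning_rate, iterations=20, start=1.0):
    """Record the loss v ** 2 while repeatedly updating a single value v."""
    v = start
    losses = []
    for _ in range(iterations):
        losses.append(v ** 2)
        gradient = 2 * v  # slope of v ** 2 at the current value
        v -= learning_rate * gradient
    return losses

curves = {
    "too large": loss_curve(1.1),
    "reasonable": loss_curve(0.1),
    "too small": loss_curve(0.001),
}

for name, losses in curves.items():
    print(f"{name:>10}: first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

In this toy case the large learning rate overshoots the best value on every step, so the loss grows instead of shrinking. The small learning rate does reduce the loss, but only slightly after 20 iterations, while the middle rate reaches a low loss smoothly.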