5.2. Neural Networks

The brain is made up of billions of neurons that are connected and send information to each other.

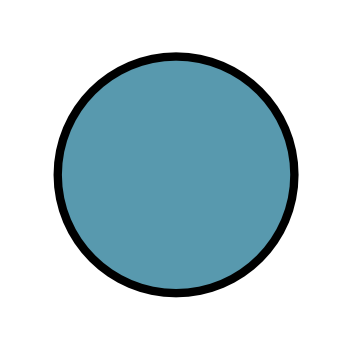

Thus, when computer scientists were developing artificial ‘brains’, they took inspiration from these neurons and developed neural networks. The most basic form of neural network is a dense neural network, which is sometimes also called a multi-layer perceptron (MLP). A dense neural network is made up of many ‘neurons’ connected to each other, which pass information through the network, i.e. the ‘brain’. In diagrams, we often represent each neuron as a circle.

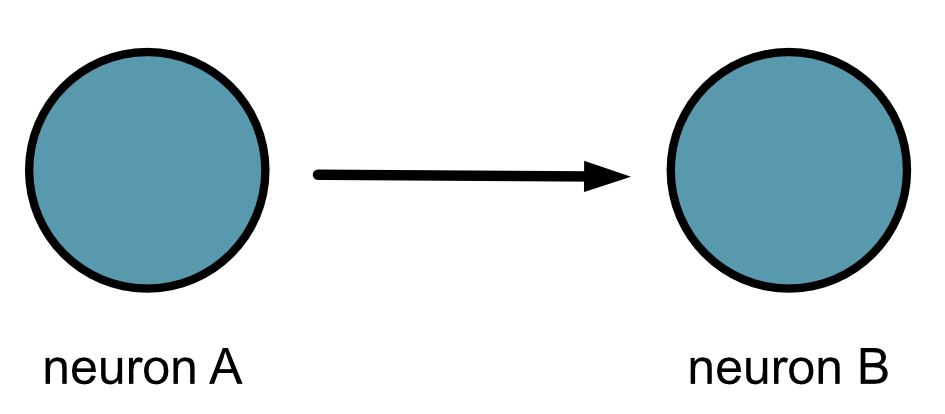

Connections between neurons are drawn as arrows. The direction of an arrow shows the way that information ‘flows’. In the diagram below, information can go from neuron A to neuron B, but it cannot go from neuron B back to neuron A.
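One way to picture one-way connections in code is as a directed graph. The sketch below is a hypothetical representation chosen for illustration (the `connections` dictionary and the `can_send` helper are not part of any library): each neuron maps to the set of neurons it sends information to, so the arrow from A to B is stored in only one direction.

```python
# Hypothetical representation of directed connections:
# each neuron maps to the set of neurons it sends information to.
connections = {
    "A": {"B"},  # the arrow A -> B
    "B": set(),  # B has no arrow back to A
}


def can_send(source: str, target: str) -> bool:
    """Return True if information can flow directly from source to target."""
    return target in connections.get(source, set())


print(can_send("A", "B"))  # True
print(can_send("B", "A"))  # False
```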

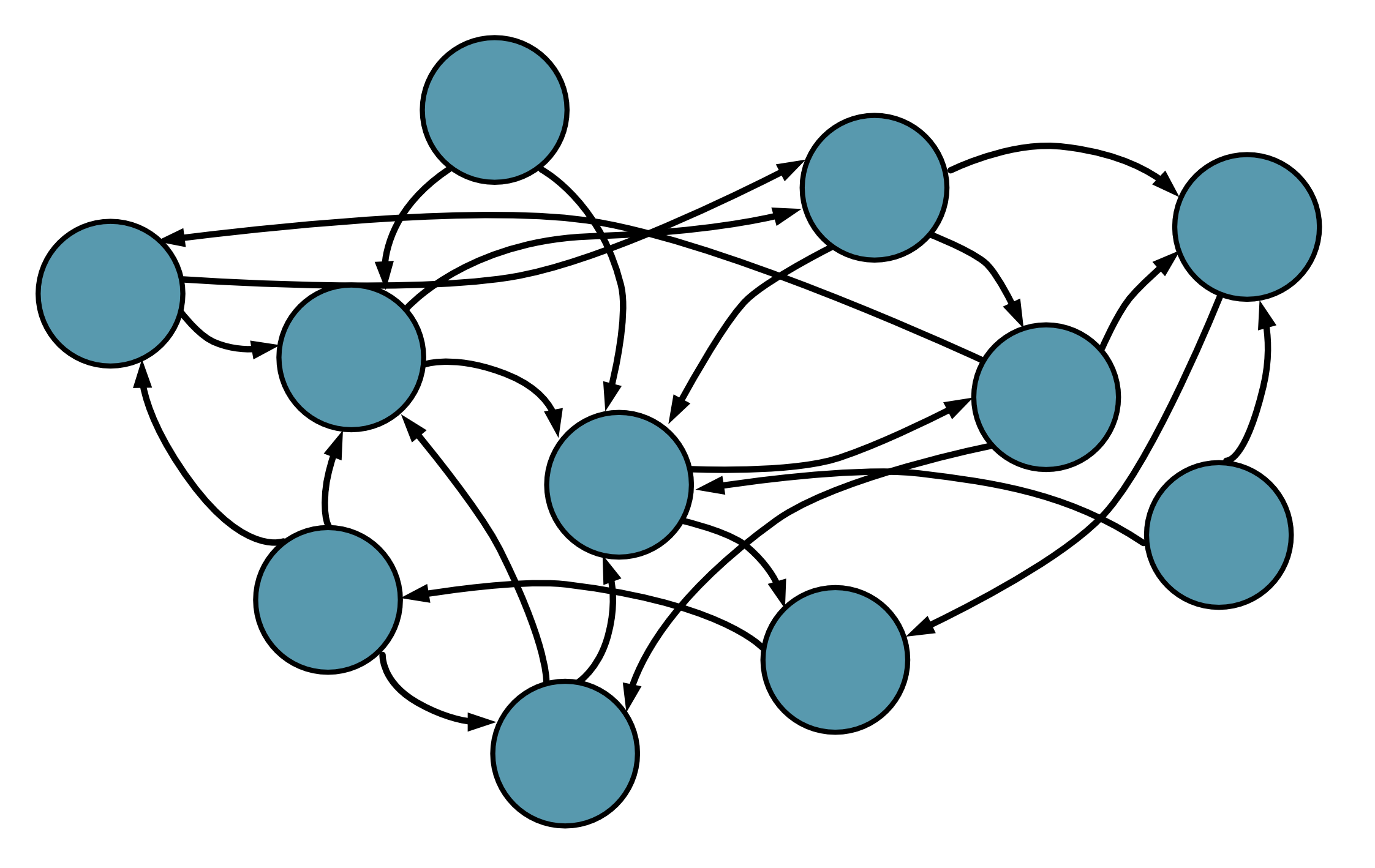

In a real brain, neurons are connected in a complicated, tangled network.

But computer scientists like to keep everything nice and neat, so we organise neurons in layers. Information flows from one layer to the next. In a fully connected network, every neuron in one layer is connected to every neuron in the following layer. In the figure below there are 3 layers of neurons and each layer has 4 neurons.
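To see how quickly connections add up in a fully connected network, here is a small sketch that lists every connection between consecutive layers. The function name `fully_connected_edges` is hypothetical, and a network is described simply by a list of layer sizes. For the 3 layers of 4 neurons described above, each of the 2 gaps between layers has 4 × 4 = 16 connections, giving 32 in total.

```python
from itertools import product


def fully_connected_edges(layer_sizes: list[int]) -> list[tuple[int, int, int]]:
    """List every (gap, from_neuron, to_neuron) connection between consecutive layers.

    `gap` 0 is the set of connections from layer 0 to layer 1, gap 1 from layer 1 to layer 2, etc.
    """
    edges = []
    for gap, (n_from, n_to) in enumerate(zip(layer_sizes, layer_sizes[1:])):
        # Fully connected: every neuron i in one layer links to every neuron j in the next.
        for i, j in product(range(n_from), range(n_to)):
            edges.append((gap, i, j))
    return edges


edges = fully_connected_edges([4, 4, 4])
print(len(edges))  # 32
```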

We also have two special layers: the input layer, which receives information, and the output layer, which returns the final result.
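The following is a minimal sketch of information flowing from the input layer, through the layers in between, to the output layer. It assumes details this section has not introduced: each connection carries a number (a weight) saying how strongly one neuron influences the next, each neuron adds up its weighted inputs, and the in-between neurons pass that total through a simple function (ReLU, which turns negative values into 0). Biases are left out to keep it short, the weights are random rather than learned, and the names `relu`, `make_layer` and `forward` as well as the layer sizes are hypothetical.

```python
import random


def relu(x: float) -> float:
    """Pass positive values through unchanged and turn negative values into 0."""
    return max(0.0, x)


def make_layer(n_in: int, n_out: int, rng: random.Random) -> list[list[float]]:
    """Random connection strengths: weights[j][i] links neuron i of one layer to neuron j of the next."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def forward(inputs: list[float], layers: list[list[list[float]]]) -> list[float]:
    """Send values from the input layer, one layer at a time, to the output layer."""
    values = inputs
    for k, weights in enumerate(layers):
        # Each neuron in the next layer adds up the weighted values of every neuron in the current layer.
        totals = [sum(w * v for w, v in zip(row, values)) for row in weights]
        is_output_layer = k == len(layers) - 1
        # Apply ReLU in the in-between layers; return the output layer's totals as they are.
        values = totals if is_output_layer else [relu(t) for t in totals]
    return values


rng = random.Random(0)
layer_sizes = [4, 4, 4, 4, 2]  # hypothetical: input layer, three layers of 4, output layer of 2
layers = [make_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]
prediction = forward([1.0, 0.5, -0.5, 2.0], layers)
print(len(prediction))  # 2
```

Leaving the output layer without ReLU is one common choice, so that the final result can be negative; other networks use a different function at the output.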

Networks come in all shapes and sizes: they can have different numbers of layers and different numbers of neurons in each layer.

Typically, the larger the network, the ‘smarter’ it can be, but the tradeoff is that larger networks are slower to ‘learn’ (i.e. train) and slower to ‘think’ (i.e. make a prediction).
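One rough way to compare the size of differently shaped networks is to count their connections, since in a fully connected network each connection means some work every time information flows through it. The sketch below uses hypothetical layer sizes and a hypothetical helper, `count_connections`; treat the count as an illustrative proxy for cost rather than an exact measure of training or prediction time.

```python
def count_connections(layer_sizes: list[int]) -> int:
    """Number of connections in a fully connected network with the given layer sizes."""
    # Each pair of consecutive layers contributes (neurons in one layer) x (neurons in the next).
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


small = [4, 4, 2]        # hypothetical small network
large = [4, 64, 64, 2]   # hypothetical larger network
print(count_connections(small))  # 4*4 + 4*2 = 24
print(count_connections(large))  # 4*64 + 64*64 + 64*2 = 4480
```

Adding one wider layer multiplies the number of connections rather than just adding a few, which is why larger networks quickly become slower to train and to run.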